AI Agents & Skills, Plus What Games Can Teach Us About Adoption

Some useful signals of enterprise AI adoption are emerging - how can agentic AI and agent skills take this to the next level?

Enterprise AI Adoption Signs of Life

There are some promising signals emerging around enterprise AI adoption and its impact on companies who are using it.

Last month’s Accountable Acceleration: Gen AI Fast-Tracks Into The Enterprise report by Wharton Human-AI Research and GBK Collective labelled 2025 as the Accountable Acceleration stage of adoption, finding that:

82% use Gen AI at least weekly and 46% daily, and 89% agree it enhances employees’ skills; but as usage climbs, 43% see a risk of declines in skill proficiency.

72% are formally measuring Gen AI ROI, focusing on productivity gains and incremental profit, and three out of four leaders are seeing positive returns on their Gen AI investments.

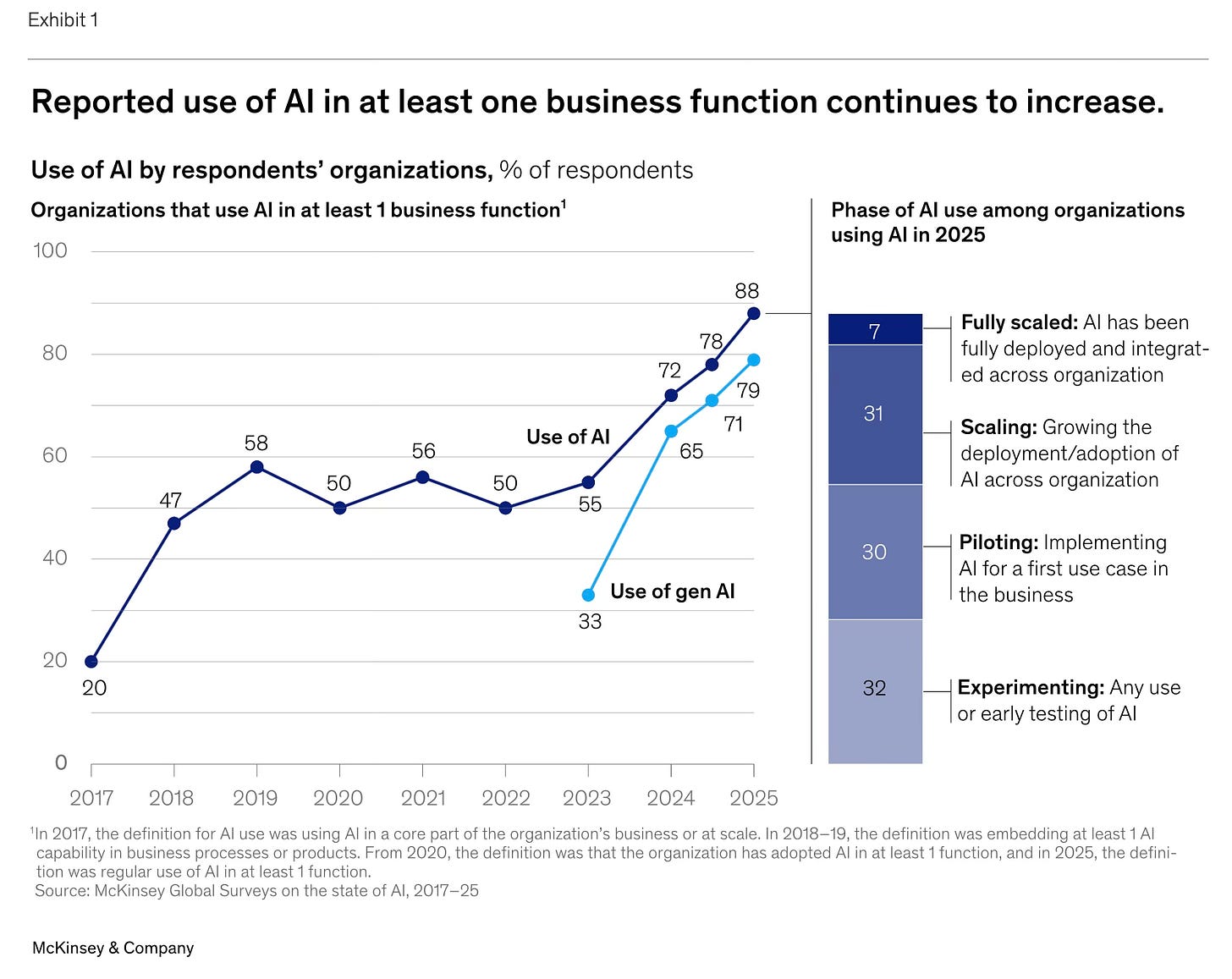

Last week, McKinsey released a new report The state of AI in 2025: Agents, innovation, and transformation, which found that while most firms are still in the pilot phase with Agentic AI and not yet scaling up, there is growing interest in the topic. In terms of the adoption impact of AI in general, the report found:

use-case-level cost and revenue benefits, with 39% of respondents reporting EBIT impact;

a majority (64%) of respondents say that AI is enabling innovation; and,

half of the AI high performers plan to use AI for business transformation, and many are already redesigning workflows to achieve this.

This last point is important, because most of the biggest potential returns on AI investment will come from using it to transform operations, rather than just make existing processes more efficient. But this is easier said than done, as speakers at Celonis’s recent conference made clear, sharing their own experience of using process intelligence and mapping to create the conditions where enterprise AI can thrive.

KPMG also recently shared some insights into their own use of Google Gemini, both internally and with clients, to advance their agentic AI agenda, as reported by Constellation Research:

Stephen Chase, Global Head of AI & Digital Innovation at KPMG, said the firm adopted Gemini Enterprise across the workforce with 90% of employees accessing the system within two weeks of launch. “We believe this is the fastest adopted technology our firm has had and we are in a regulated industry,” said Chase. “We went into it with the idea this was going to be part of our overall transformation. It was never about individual use cases. It was about sparking innovation.”

From talking to some of the people advancing agentic AI within KPMG and other leading professional services firms recently, it is clear they see a huge opportunity for business transformation here, and they are very committed to pursuing it in a systematic way by focusing on building blocks, architecture and re-use, not just stand-alone use cases and apps.

Agents are Getting Easier

Whilst it is true that mapping and re-engineering existing processes and workflows can be a complex undertaking within large organisations, once this hurdle is cleared, it is becoming easier and cheaper to create powerful agentic AI capabilities on top.

Long-term, as we have written previously, enterprises might be able to benefit from more control, customisation and security by using smaller models, perhaps even running in their own infrastructure. As Seth Dobrin from the Silicon Sands newsletter put it recently:

A business is not a general-purpose entity, so why should it expect to get value from a general-purpose model? This is the fundamental question that the AI industry is finally being forced to confront. The answer is simple: it shouldn’t. The actual value of AI in a business context lies not in its ability to do everything, but in its capacity to do specific things exceptionally well. This is where SLMs excel.

The recent excitement around Kimi K2 ‘Thinking’ and other open models suggests that it is possible to train powerful AI models for orders of magnitude less cost compared to the leading platform models, and calls into question the current strategy of consuming ever more compute resources to deliver marginal improvements in function.

But it also suggests that many businesses should at least be thinking about owning and adapting small models for some purposes. Perhaps we don’t need big, all-powerful general-purpose models for most of what we do in the enterprise, and such models may also lack the specific nitty-gritty detail of a particular knowledge domain or business sector.

However, even when building agentic AI on general models, it is becoming easier and more realistic to adapt and guide them according to our specific needs, use cases and rules of the road, and the launch of Claude Skills by Anthropic last month could be a major proof point for this approach.

Skills Could be a Game-Changer

Michael Spencer believes Claude Skills will enable Anthropic to overtake OpenAI in the enterprise market, by simplifying the process of teaching and guiding LLMs to perform specific tasks in ways that suit a particular organisation or function.

Anthropic overtakes OpenAI in ARR either in 2027 or 2028, and the reason is the utility it is providing Enterprise customers and businesses all over the world. On October 16th, 2025 [Anthropic] announced Claude Skills.

Leading AI expert Simon Willison is also very bullish about Claude Skills, and his overview of what they can do is a good primer on the topic, albeit mostly from a coding perspective. In particular, he highlights the simplicity and token-efficiency of this approach to customising the work of agents to suit local needs:

There’s one extra detail that makes this a feature, not just a bunch of files on disk. At the start of a session Claude’s various harnesses can scan all available skill files and read a short explanation for each one from the frontmatter YAML in the Markdown file. This is very token efficient: each skill only takes up a few dozen extra tokens, with the full details only loaded in should the user request a task that the skill can help solve.
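To make the token-efficiency point concrete, here is a minimal Python sketch of the pattern Willison describes: index only the frontmatter of each skill file up front, and read the full instructions only when a task calls for that skill. It is an illustration, not Anthropic's implementation. The file path, the `name` and `description` fields, the example skill and all function names are assumptions made for this sketch, and the parser handles only flat `key: value` lines rather than full YAML.

```python
from dataclasses import dataclass


@dataclass
class SkillSummary:
    """The short metadata kept in context for every available skill."""
    name: str
    description: str


def split_frontmatter(text: str) -> tuple[dict[str, str], str]:
    """Split a Markdown document into (frontmatter fields, body).

    Simplification: only flat `key: value` lines are understood.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}, text
    fields: dict[str, str] = {}
    for i, line in enumerate(lines[1:], start=1):
        if line.strip() == "---":
            # Everything after the closing delimiter is the skill body.
            return fields, "\n".join(lines[i + 1:]).strip()
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return {}, text  # no closing delimiter: treat as plain Markdown


def build_index(skill_files: dict[str, str]) -> dict[str, SkillSummary]:
    """Session start: keep only each skill's name and description."""
    index: dict[str, SkillSummary] = {}
    for path, text in skill_files.items():
        fields, _ = split_frontmatter(text)
        if "name" in fields and "description" in fields:
            index[path] = SkillSummary(fields["name"], fields["description"])
    return index


def load_skill_body(skill_files: dict[str, str], path: str) -> str:
    """On demand: return the full instructions for one selected skill."""
    _, body = split_frontmatter(skill_files[path])
    return body


# Hypothetical skill file, held in memory so the sketch runs without a disk layout.
EXAMPLE_SKILLS = {
    "skills/invoice-check/SKILL.md": (
        "---\n"
        "name: invoice-check\n"
        "description: Validate supplier invoices against purchase orders.\n"
        "---\n"
        "# Invoice check\n"
        "1. Match the invoice number to a purchase order.\n"
        "2. Flag any totals that differ by more than the agreed tolerance.\n"
    ),
}

if __name__ == "__main__":
    for path, summary in build_index(EXAMPLE_SKILLS).items():
        print(f"{summary.name}: {summary.description}")
```

In this sketch the agent's context would hold only the one-line summaries returned by `build_index`; `load_skill_body` is called later, and only for the skill that matches the user's request.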

Although developers will be a major target audience for Skills, it is worth remembering that skills and sub-skills can be built and adapted using plain language prompting, which means it is even more important for people and teams to codify the way they work, because they can now make these guidelines available to agents to improve their outputs.

Matteo Cellini has written about how important this approach could be in making work - and organisations - more programmable, with a useful guide to getting started with some simple work skills:

One of the biggest shortcomings of AI Agents at the moment, is that even though they can reason, summarize, even code — they are still like a bright interns without process memory. Ask to reconcile accounts, prepare an ESG report, or validate an invoice, and they’ll improvise every time. Anthropic’s new Agent Skills framework promises to give them exactly that — modular micro-expertise that can be shared, reused, and improved.

Adoption Lessons from Game Design

Modular micro-expertise that can be shared, reused and improved is a good description of what makes agentic AI so interesting. Providing access to powerful, general AI capabilities can work well for some people, but widespread adoption into people’s daily work usually needs a better connection between capability and need.

As Ben Lorica writes for O’Reilly Radar, tackling small, specific pain points and use cases is a good approach to AI adoption in the enterprise more broadly, at least as a counterpoint to the roll-out of general AI capabilities that people do not always know how to absorb into their daily work:

The [AI] teams who succeed won’t be those chasing the most advanced models. They’ll be the ones who start with a single Hero user’s problem, capture unique data through a focused agent, and relentlessly expand from that beachhead. In an era where employees are already voting with their personal ChatGPT accounts, the opportunity isn’t to build the perfect enterprise AI platform—it’s to solve one real problem so well that everything else follows.

This is an area of behavioural science where games and world-building can teach us a lot.

First, as we have seen with the first general wave of chatbots, giving people a blank text box and telling them they can do or ask anything is far less effective than you might imagine, and quickly leads to disappointment and disengagement as its limitations become painfully clear.

In games, where very basic (NPC) character AIs have been used for many years, designers know how to use dialogue design and context setting to signal to players that a shop-keeper just sells materials, or a quest giver will tell you their story and ask for help. Many games that seem open world are in fact driven by behavioural corridors that guide you where you need to go to avoid frustration.

In enterprise AI, the majority of agents will not be voice- or chat-activated, but will work together, triggered by API / MCP calls, for example, to perform tasks. But some will still talk to you. How can we avoid them wasting your time or setting expectations so broad that they tend to disappoint?

There has been a lot of progress in AI voice interfaces, and I still marvel at what tools like ChatGPT can do for me by interpreting my rambling voice prompts when I am on the move. But I absolutely cannot stand its patronising and obsequious voice responses, so I take my output in text and with a pinch of salt.

If we expect to see more ambient AI interaction using conversational interfaces, then we probably need to start thinking about character development for different use cases and user groups.

Nathan Lambert has shared an intriguing new thread of research into character training. Some of this can be achieved very simply using prompts or skills that guide how an agent responds to people, but more advanced approaches might require human-supervised fine-tuning of the models themselves.

And of course there are lots of other lessons to be studied about how an increasingly agentic digital workplace can engage people, guide them where they need to go, and generally create a rich environment for human-AI collaboration.

Accomplished game designer Raph Koster has shared a 12-step programme for building game worlds that engage people and motivate them to invest so much of their time in exploring and mastering them. It’s a great read if you want to understand world-building, not just for game designers but also for business leaders in general, especially those who want to get the most out of their teams.

Good game design produces a level of engagement, effort and problem solving that companies could only dream of in relation to their workforce. For example, after any popular game is released, we usually see a whirlwind of volunteer peer-to-peer knowledge work, as people create super-detailed guides and comprehensive learning resources to help other players within days of launch.

Is this kind of rich peer-to-peer collaboration possible in your organisation?