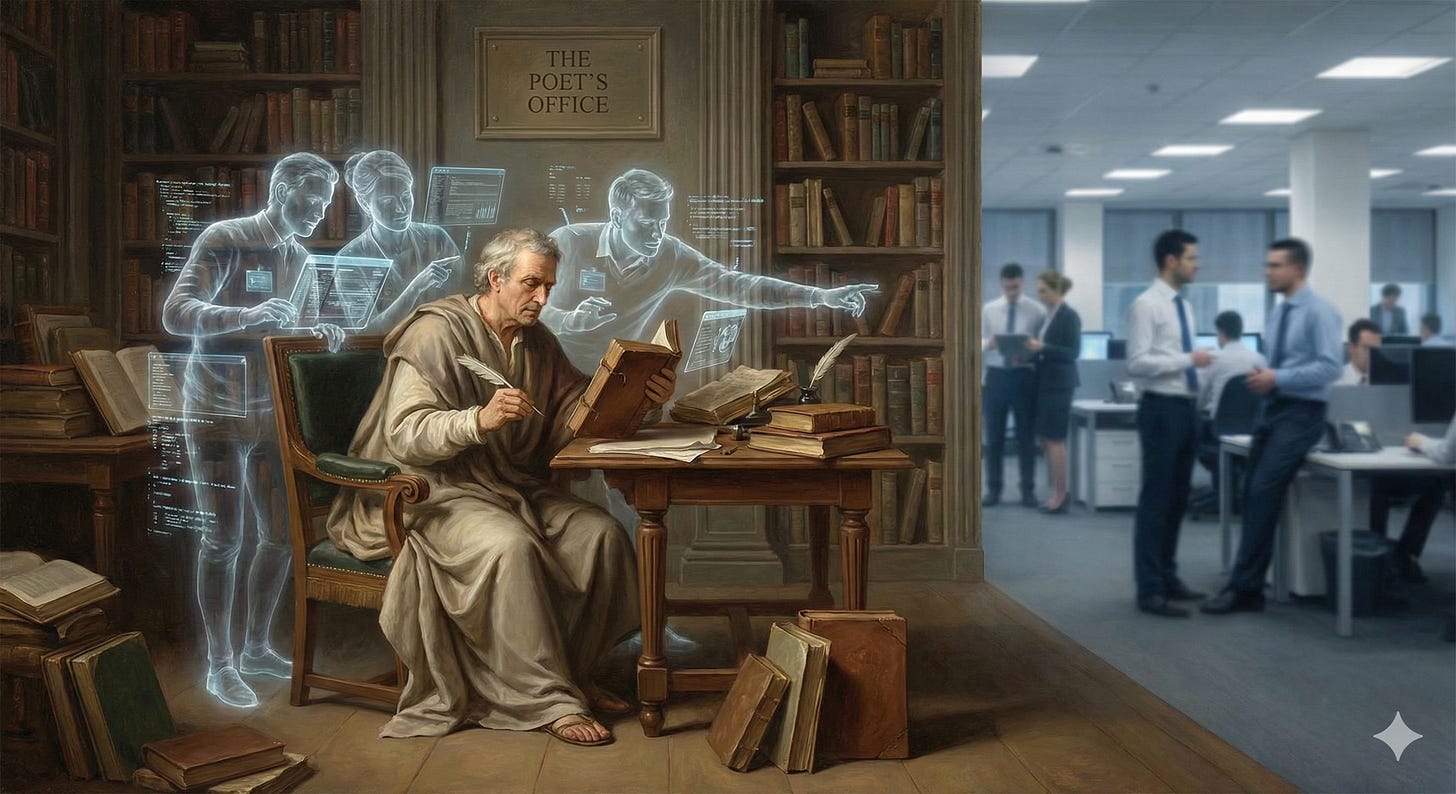

Metamorphoses: "Of Bodies Chang'd to Various Forms, I Sing"

New models, evidence of hyperproductivity, automated governance and the potential for leaders to guide the programmable organisation like poets

In the programmable organisation, leaders need to think less like bureaucrats and more like architects and coders to provide the context and instructions needed to operate smart systems.

Or perhaps poets?

Poetry and coding are both forms of language that act as compression technologies (like a zip file), leveraging a lot of symbolic pointers to invoke a great deal of meaning with just a few words. This is why some poems, songs or phrases can inspire social change or action. Words have power, as Billie Holiday’s Strange Fruit, The Internationale or even Yankee Doodle attest.

As we have written previously, once an organisation’s processes and workflows become addressable, they become programmable. And once we codify rules, guidelines and world lore, it is possible for simple agents to combine, operate and oversee these processes without going off the rails. Taken together, this will give leaders the ability to invoke complex combinations of actions with clarity of thought and goals, expressed in simple words.
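To make "addressable, therefore programmable" a little more concrete, here is a minimal Python sketch. Everything in it is hypothetical and chosen only for illustration: the `PROCESSES` registry, the `finance/approve-invoice` address and the spending-limit rule are assumptions, not a description of any real platform. The point is simply that once a process has a stable address and the rules are written down as code, a simple caller (human or agent) can invoke the process by name and be stopped before it goes off the rails.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical registry: each process gets a stable address (a string key).
PROCESSES: Dict[str, Callable[[dict], dict]] = {}


def process(address: str):
    """Register a function under an address so it can be invoked by name."""
    def decorator(func):
        PROCESSES[address] = func
        return func
    return decorator


# Hypothetical codified rule: returns a reason to block, or None to allow.
def spending_limit(address: str, payload: dict) -> Optional[str]:
    if payload.get("amount", 0) > 10_000:
        return "amount exceeds the 10,000 limit"
    return None


RULES: List[Callable[[str, dict], Optional[str]]] = [spending_limit]


@process("finance/approve-invoice")
def approve_invoice(payload: dict) -> dict:
    return {"status": "approved", "invoice": payload["invoice"]}


def invoke(address: str, payload: dict) -> dict:
    """Run an addressable process, but only if every codified rule allows it."""
    for rule in RULES:
        problem = rule(address, payload)
        if problem:
            return {"status": "blocked", "reason": problem}
    return PROCESSES[address](payload)
```

In this sketch, `invoke("finance/approve-invoice", {"invoice": "INV-1", "amount": 500})` runs the process, while the same call with an amount of 50,000 is blocked by the rule before the process is reached.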

But poetry and literature build on millennia of shared experience and the evolution of languages. So we have some work to do at the lower levels of the intelligence stack in our organisations to make this a reality. If more leaders had taken those pesky knowledge management nerds more seriously twenty years ago, they might be in a better state of AI readiness today ;-)

Wall Street’s Next Top Model

In AI news, we have seen a number of impressive new LLM releases recently, most of which have delivered noticeable marginal improvements in intelligence and efficiency, but without really taking us forward to a new frontier.

The big news was probably Google’s release of Gemini 3, which has impressed testers and early adopters. Coming alongside a new coding IDE, improved image generation capabilities and other new developments, this release contributed to a sense of Google achieving a dominant position in the AI sector.

Subsequently, OpenAI released GPT 5.1 Codex Max, focused on long-running coding tasks across multiple context windows, which seems like a good approach for agentic AI.

Also, not to be forgotten, Anthropic released Claude Opus 4.5 to consolidate its lead in coding models.

But there was also good progress in open models outside China. Nathan Lambert profiled some of the open models being developed in the USA, such as the recently released OLMo3, which is approaching the performance of leading LLMs while remaining fully auditable. That matters for enterprises, especially those in regulated sectors.

As Azeem Azhar points out, aside from the safety and sovereignty questions, switching to open models could save vast amounts of money for enterprises that rarely need the cutting-edge general intelligence the frontier models offer:

Despite open models now achieving performance parity with closed models at ~6x lower cost, closed models still command 80% of the market. Enterprises are overpaying by billions for the perceived safety and ease of closed ecosystems. If this friction were removed, the market shift to open models would unlock an estimated $24.8 billion in additional consumer savings in 2025 alone.

But long-term, LLMs are not the only route to intelligence. The commentary around Yann LeCun’s unexpected departure from Meta has reminded industry watchers that he is not alone in doubting that scaling LLMs will achieve AGI, or in arguing that we should look to other approaches such as world models and neuro-symbolic AI, which we have covered previously. Elon Musk has also been making typically cavalier claims about xAI’s advantage being its access to world model data from Tesla vehicles and … err … Twitter.

Enterprise AI Architecture Gaps

In the enterprise AI space, development is understandably a few steps behind the powers of the frontier models and ideas like programmable organisations and leaders as poets, but we are making progress nonetheless.

Constellation recently shared a handy round-up of questions enterprises are (or should be) asking about AI and its implications for their IT estate, such as whether agentic AI will kill off underwhelming, generic SaaS platforms. They also point out that even if agentic AI is just able to solve the seemingly eternal problem of enterprise search, it would be worth the price of entry:

Five years from now will we all say, ‘those LLMs turned out to be a kick ass enterprise search’? The more I use LLMs, the more I think their greatest contribution is perusing structured and unstructured data and surfacing it easily. LLMs clearly collapse the time spent on conducting searches and doing superficial research. Yes, LLMs will make stuff up, but they’re a great starting point. When combined with enterprise data and repositories that have been useless for years, LLMs are revamping the search game for companies. Suddenly, context engineering is a thing.

As with so many other questions where enterprise AI shows great potential for improving our organisations, architecture is the key, and therefore a priority for AI readiness efforts.

Neal Ford and Mark Richards shared an interesting piece last week about how agentic AI with MCP plumbing is demonstrating an ability to solve enterprise architecture problems and provide smart, adaptive oversight and even automation, all building on the idea of evolutionary architecture.

X as code (where X can be a wide variety of things) typically arises when the software development ecosystem reaches a certain level of maturity and automation. Teams tried for years to make infrastructure as code work, but it didn’t until tools such as Puppet and Chef came along that could enable that capability. The same is true with other “as code” initiatives (security, policy, and so on): The ecosystem needs to provide tools and frameworks to allow it to work. Now, with the combination of powerful fitness function libraries for a wide variety of platforms and ecosystem innovations such as MCP and agentic AI, architecture itself has enough support to join the “as code” communities.
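As a rough illustration of what an architectural fitness function can look like, the Python sketch below checks a simple layering rule against a map of module dependencies. The layer names, the `ALLOWED` rule and the module names are all invented for this example, and it does not use any particular fitness function library or MCP tooling; it only shows the general shape of an architecture rule expressed as code that can be run automatically.

```python
from typing import Dict, List, Set

# Hypothetical layering rule: which layers each layer may depend on.
ALLOWED: Dict[str, Set[str]] = {
    "ui": {"service"},
    "service": {"data"},
    "data": set(),
}


def layer_of(module: str) -> str:
    """Assumes modules are named '<layer>.<name>', e.g. 'ui.checkout'."""
    return module.split(".", 1)[0]


def layering_violations(dependencies: Dict[str, List[str]]) -> List[str]:
    """Return one entry per dependency that breaks the layering rule."""
    violations = []
    for module, targets in dependencies.items():
        for target in targets:
            src, dst = layer_of(module), layer_of(target)
            if src != dst and dst not in ALLOWED.get(src, set()):
                violations.append(f"{module} -> {target}")
    return violations


deps = {
    "ui.checkout": ["service.orders"],
    "service.orders": ["data.orders_repo"],
    "ui.reports": ["data.orders_repo"],  # skips the service layer
}
print(layering_violations(deps))  # ['ui.reports -> data.orders_repo']
```

A check like this could run in a build pipeline, or be exposed to an agent as a tool, so that the rule is enforced continuously rather than rediscovered in review.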

Clearly this idea has potential for non-technical domains as well, such as making sense of competing or overlapping guidance frameworks and rulesets. In fact, as David Barry writes, in terms of AI governance, we will soon reach a point where ‘human in the loop’ approaches are unable to keep up and we need to develop hybrid AI/human governance and oversight methods.

In a similar vein, Wired report today that Amazon is successfully using teams of AI agents to hunt down and fix bugs deep within its codebases that might cause security issues.

This emerging area of agentic AI oversight, governance and monitoring will be an important focus for enterprise architecture as agentic AI advances. ServiceNow just announced a partnership with Microsoft to integrate its own AI controllers with Microsoft’s AI tools, such as the recently released Agent 365, which Redmond describes as a control plane for AI agents.

Do AI Workers Love Their Children Too?

Are AI agents a new class of employee - like workers - or are they just a smarter class of software tools? This question might seem purely semantic, but it could have design implications for the smart digital workplace and, as NVIDIA’s Jensen Huang argues, it might also shape how we see the economic potential of the AI market.

Tim O’Reilly argues that talk of agents as workers is overblown and could risk de-humanising the workplace:

As an entrepreneur or company executive, if you think of AI as a worker, you are more likely to use it to automate the things you or other companies already do. If you think of it as a tool, you will push your employees to use it to solve new and harder problems. If you present your own AI applications to your customers as a worker, you will have to figure out everything they want it to do. If you present it to your customers as a tool, they will find uses for it that you might never imagine.

The notion that AI is a worker, not a tool, can too easily continue the devaluation of human agency that has been the hallmark of regimented work (and for that matter, education, which prepares people for that regimented work) at least since the industrial revolution. In some sense, Huang’s comment is a reflection of our culture’s notion of most workers as components that do what they are told, with only limited agency. It is only by comparison with this kind of worker that today’s AI can be called a worker, rather than simply a very advanced tool.

It’s a good piece from somebody who has seen a lot of change and lived through (and contributed to) a few hype cycles.

We already have examples of digital worker platforms in the wild, and perhaps Huang is right that by creating an infinite pool of cheap workers, we can expand the global economy beyond what we imagine today. But I am with Tim on this.

The reason we favour the idea of centaur teams - high-agency people using teams of AI agents (as tools, not synthetic employees) to support their work - is largely that human creativity and ingenuity continue to surprise us.

Steve Newman recently shared an interesting article looking at real, existing examples of AI-enabled hyperproductivity:

…the new breed of hyperproductive teams have three things in common. They’re building lots of bespoke tools. They’re letting AIs do all of the direct work, reserving their own efforts to specify what should be done and to improve the tools. And they are achieving a compounding effect, using their tools to improve their tool-improving tools. The net effect is what I’m calling “hyperproductivity”

In the examples he cites, which are mostly limited to individual developers working on small projects, the secret sauce and the multiplier is not just the tools themselves, but the human creativity and ‘hustle’ that drive their imaginative use.

Can Leaders also be Hyperproductive?

Looping back to where we started, it is clear that there is a lot that leaders can learn from the behaviours and working methods of tech people, whether enterprise architects, developers or DevOps people running operations.

If you are curious and courageous enough to orchestrate some agents and tools, create some automated data and knowledge inputs, and perhaps use AI to help assemble these into a scaffold for your work, then you can create some of the conditions of hyperproductivity that Steve Newman described in his piece.

Operational leadership, like software, is at its base a series of instructions, but these are built on layers of orchestration and abstraction. The idea is that you have people and systems below you that know how to do the work, so that you can guide and instruct rather than micromanage. And when these components and processes become addressable and programmable, then a whole world of possibilities opens up.

Contracts and agreements are also like software, and we have seen the power of new models such as RenDanHeyi that are built around a web of mutual agreements and commitments. Smart contracts could be a feature of the way that agents cooperate and coordinate, so rather than just micromanaging agents with a series of if-this-then-that instructions, we might give them contracts and guidelines and let them work out the finer details.
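One way to picture the difference between scripting every step and handing over a contract is the small Python sketch below. The `Contract` class, its fields and the example plans are hypothetical, and this is not a blockchain smart contract or the API of any agent framework. It only illustrates the idea that the contract states the outcome and the limits, while the agent (here reduced to a stand-in function) chooses its own route within them.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Contract:
    """Hypothetical agreement: what must be delivered, and within which limits."""
    deliverable: str
    max_cost: float
    max_days: int

    def is_met(self, result: Dict) -> bool:
        return (
            result.get("deliverable") == self.deliverable
            and result.get("cost", float("inf")) <= self.max_cost
            and result.get("days", float("inf")) <= self.max_days
        )


def cheapest_valid_plan(contract: Contract, plans: List[Dict]) -> Dict:
    """Stand-in for an agent: free to choose, judged only against the contract."""
    valid = [p for p in plans if contract.is_met(p)]
    if not valid:
        raise ValueError("no plan satisfies the contract")
    return min(valid, key=lambda p: p["cost"])


contract = Contract(deliverable="market report", max_cost=500.0, max_days=5)
plans = [
    {"deliverable": "market report", "cost": 300.0, "days": 7},  # too slow
    {"deliverable": "market report", "cost": 450.0, "days": 4},
    {"deliverable": "market report", "cost": 480.0, "days": 2},
]
print(cheapest_valid_plan(contract, plans))
```

Here the cheapest plan is rejected because it breaks the time limit, so the stand-in agent settles on the 450.0 plan. Nothing in the contract says how the report should be produced, only what counts as meeting the commitment.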

If leaders can learn to operate this kind of smart, connected work system, establishing the right goals, context and guidance, then we could see the same kind of hyperproductivity. Some of the most advanced hi-tech equipment in the world today - e.g. F1 cars, fighter jets, FPV drones, remote surgery - is still incredibly sensitive to human creativity and skill, with people pushing the boundaries of what the tech can do.

May the best poet win!