Small Models, Real Change: How Leaders Can Use SLMs to Make AI Fit Their World

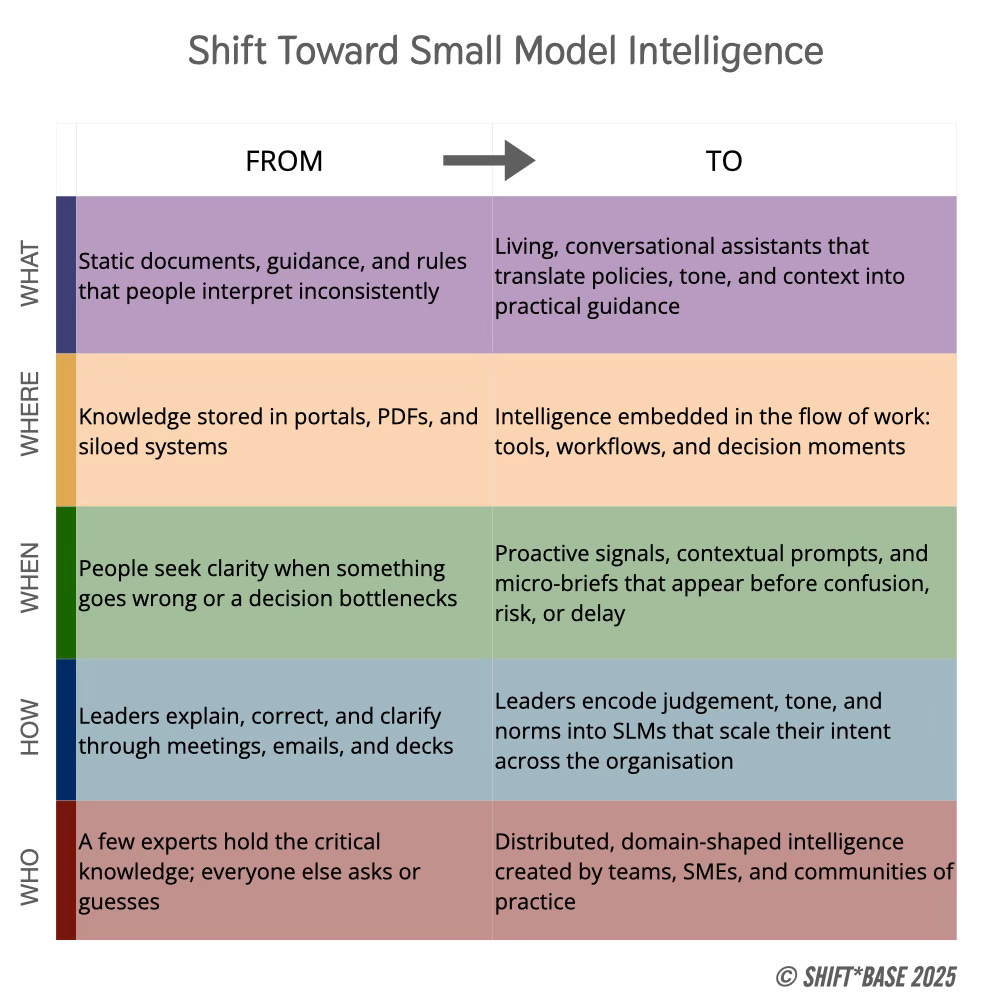

Why the next stage of AI adoption isn’t about going bigger, but about getting closer - to context, culture, and control.

Most leaders are being urged to adopt AI tools at scale, but few are being shown how to make those tools fit their business. Much of enterprise AI still relies on general-purpose models that typically live in someone else’s cloud, know nothing of local context, and sound nothing like the company using them.

This is why Small Language Models (SLMs) are becoming so interesting for business leaders - they’re compact, customisable, and, most importantly, trainable on your world. They can make AI more personal, more trustworthy, and more adaptable, and they are small enough to understand, to try, and to reject if needed.

Whilst at least two-thirds of enterprise AI projects today involve large language models (LLMs) in the cloud, we can expect a hybrid of locally owned small models, supplemented by generic cloud-based LLMs, to become the principal direction of travel in the next year or so. The cloud may still be the entry point, but hybrid will be the destination: generic LLMs for broad intelligence, small private models for the work that truly matters.

That means now is exactly the right moment to explore what SLMs can add: focused, private models that solve real problems with more control over data security, tone, and local conditions. They’re one of the few AI technologies leaders can start using today while also preparing for the hybrid architectures that will define tomorrow.

So what becomes possible when organisations put these models to work on their own problems?

The Problem with Generic Intelligence

Many AI pilots run on foundation models built for everyone and no-one in particular. They aim only for general productivity gains, without being clear about how those gains will be made.

These models are fluent, capable, and endlessly creative, but they lack the context needed to produce connected, meaningful outputs. They don’t know your business logic, your acronyms, your risk appetite, or your tone of voice.

That can make them impressive at first, but not reliable enough for some use cases. A general model can draft a report, summarise a document, or brainstorm ideas, but it can’t tell whether those ideas align with your policies, your market realities, or your brand. It’s intelligence without grounding, and it lacks accountability when it matters most.

For leaders, this creates an uncomfortable paradox: AI that’s powerful enough to change how work gets done, but generic enough to mislead, confuse, or dilute what makes your organisation distinct. It can also disrupt the finely tuned ways of working, communication flows, and psychological safety of a high-performing team.

As we work to move AI adoption from pilots and prototypes into daily operations, that lack of specificity could be costly. Early decisions made on partial understanding start to shape workflows, customer experiences, and even culture. Early adopters are discovering that general intelligence can’t carry organisational nuance, and that without local context, AI risks amplifying noise as well as insight. The next phase of adoption will require a different kind of intelligence: smaller, focused, and tuned to the enterprise itself.

For leaders, this shift isn’t technical; it’s strategic. SLMs put agency back where it belongs: in the hands of those who understand the business best. They allow leaders to guide how intelligence is expressed inside the organisation, through its tone, its rules, and its judgement, rather than outsourcing that understanding to someone else’s model.

Use Case 1: Policy Assistant - Translating Principles into Everyday Practice

Policy interpretation is something every leader already does. It’s the constant translation of written principles into day-to-day judgement: “Does this meet our standard?” “Where’s the line between autonomy and compliance?”

A small, domain-tuned Policy Assistant helps leaders scale that judgement. Trained on your existing policy manuals, approval chains, and communications, it can answer policy questions in plain language, cite the relevant clause, and flag where interpretation might require escalation.

This isn’t a new capability; it’s an upgrade of a familiar process. It turns passive documents into active dialogue, giving teams quick clarity while giving leaders better visibility of where confusion, exceptions, or outdated rules live. Over time, these patterns help refine governance itself.

We are already seeing this emerge in HR, with cloud platforms that ingest employee policies and make them queryable through a chatbot. But firms that take more control, and combine all kinds of policy guidance rather than just HR manuals, will be able to build more powerful assistants. These assistants can understand every angle, from HR to compliance, regulatory requirements, and even internal best practice and guides.

Use Case 2: Knowledge Keeper - Capturing How Work Really Happens

Every team lead knows how easily expertise disappears. People move on, projects close, lessons fade, and with them go the small, hard-won details that make work run smoothly. Most knowledge management systems capture what was done, but rarely how or why.

A Knowledge Keeper model could change that. By training a small language model on the team’s own meeting notes, retrospectives, project reports, and wikis, leaders can create an assistant that remembers how things were actually achieved: the reasoning, context, and trade-offs behind decisions.

This is not just an efficiency gain; it’s a net-new capability: an institutional memory that talks back. It can explain the logic behind a past decision, show how a process evolved, or surface comparable patterns when teams face similar challenges. For leaders, it means knowledge becomes active again, less about archiving the past and more about accelerating the present.

Over time, a Knowledge Keeper can help spot repetition, duplication, and learning gaps across functions, turning the messy history of how the organisation learns into a valuable strategic asset.

Use Case 3: Decision Briefing - Faster, Context-Aware Judgement

Leaders already spend a huge amount of time making sense of information: condensing reports, comparing options, and preparing for decisions under time pressure. But most of this synthesis work still happens manually, scattered across inboxes, decks, and late-night note-taking.

A Decision Briefing model reframes that process. Trained on your organisation’s strategic language, KPIs, governance frameworks, key risks, and decision templates, a small, domain-tuned model can assemble short, context-rich briefs that surface what matters most.

Unlike a generic summariser, an SLM-based Decision Briefing model knows what good looks like here. It understands which metrics matter, which trade-offs recur, and which narratives resonate with your board or sponsors. It can pull insights from multiple systems, flag inconsistencies, and present information in the tone and structure that supports confident decision-making.

For leaders, this is an evolution of a familiar process. It transforms briefing from a time-consuming, reactive task into a fast, adaptive loop, giving leaders back time to think, while ensuring decisions remain anchored in shared context.

Use Case 4: Learning Companion - Embedding Culture in Learning

The challenge most corporate learning systems face is that they teach what to do, but not how we do it here. They’re generic by design, optimised for scale rather than nuance.

A Learning Companion built on a small, internal model can change that. By training it on leadership principles, case studies, onboarding materials, and real examples of decision-making, organisations can create learning assistants that speak in the company’s own voice.

Instead of serving abstract modules, the companion can coach employees through real situations:

“How would we apply our customer-first principle here?”

“What tone fits our communication style?”

“How do we balance speed with safety in this context?”

For leaders, this represents a translation of an existing process: embedding mentorship, feedback, and values-driven learning directly into daily workflows. It scales culture without diluting it, turning learning from an HR function into a living expression of leadership.

Over time, Learning Companions become mirrors of organisational maturity. The richer the examples and stories they contain, the clearer a picture they offer of how a company actually learns, and whether its principles hold up under pressure.

Use Case 5: Customer Context Partner - Bringing Human Insight Back Into Digital Interactions

Leaders have always relied on customer data to guide decisions, but most of that data describes transactions, not relationships. Generic AI tools can analyse patterns or generate messages, but they rarely grasp what makes a customer trust your organisation.

A Customer Context Partner built on a small, domain-tuned model bridges that gap. Trained on your organisation’s customer conversations, service transcripts, brand guidelines, and satisfaction data, it acts as an interpreter between human intent and digital scale.

It can help teams craft responses that sound like your brand, summarise the real issues behind customer feedback, or highlight where sentiment is starting to drift. Unlike general-purpose assistants, it understands both the customer’s language and the company’s ethos, including how to be empathetic within boundaries.

For leaders, this isn’t a new capability; it’s a smarter translation of what great service has always required: context, consistency, and care. It ensures every digital touchpoint reinforces trust and coherence, even as AI handles more of the interaction volume.

And because it learns from real examples, it becomes a tool for reflection too, revealing patterns of misunderstanding, tone drift, or policy friction that leadership can address upstream. In that way, it doesn’t just scale service; it helps leaders see the organisation through the customer’s eyes.

Why Small Models Matter

Across these examples, the pattern is the same: each one turns something leaders already care about into something AI can finally handle responsibly. The difference lies in where the intelligence lives.

SLMs change the balance of power. Because they’re trained on your data and run in your environment, they allow AI to reflect the business it serves rather than reshape it from the outside. They bring the benefits of generative AI (speed, synthesis, creativity) while staying grounded in organisational truth.

For leaders, this is the breakthrough: SLMs make AI governable, teachable, and trustworthy.

They give leaders a way to align AI’s intelligence with the organisation’s judgement. This happens not by writing more policy documents, but by embedding context, tone, and values directly into the models that support daily work.

When AI speaks your language, follows your rules, and understands your purpose, it stops being a tool you adapt to, and starts becoming a capability you lead.

Small Has Been the Answer Before

Every major wave of digital transformation has followed a similar rhythm: we start by going big, with central platforms, sweeping integrations, and giant systems, and only later realise that progress depends on going small. In the past, the path of least resistance for organisations lacking technical confidence sometimes seemed to be blowing their budget on a ‘good enough’, one-size-fits-all SaaS purchase rather than building their own strategic digital business capabilities. Thankfully, that is changing.

When software ‘eating the world’ led to sprawling monoliths, micro-services restored agility by breaking them down into smaller, interoperable components.

When web publishing concentrated power in a few portals, blogs and social tools brought it back to individuals and teams.

When cloud computing abstracted infrastructure into distant data centres, edge computing brought processing closer to where the data lives.

Each shift rebalanced power from centralised scale to distributed intelligence. Small systems proved to be the engines of speed, resilience, and ownership.

SLMs are part of that same pattern.

They do for AI what micro-services did for software: take something vast, opaque, and external, and make it modular and locally adaptable.

They give leaders a way to own intelligence, just as they once learned to own data, applications, and experience: by becoming smarter in their own space.

Now let’s consider what this means for change leadership as it relates to enterprise AI, and look at different paths to get started.