To Protect Human Agency, Codify the Invisible

Codified coordination, forkable practices, and agentic guardrails—how we design AI-ready work that puts people first.

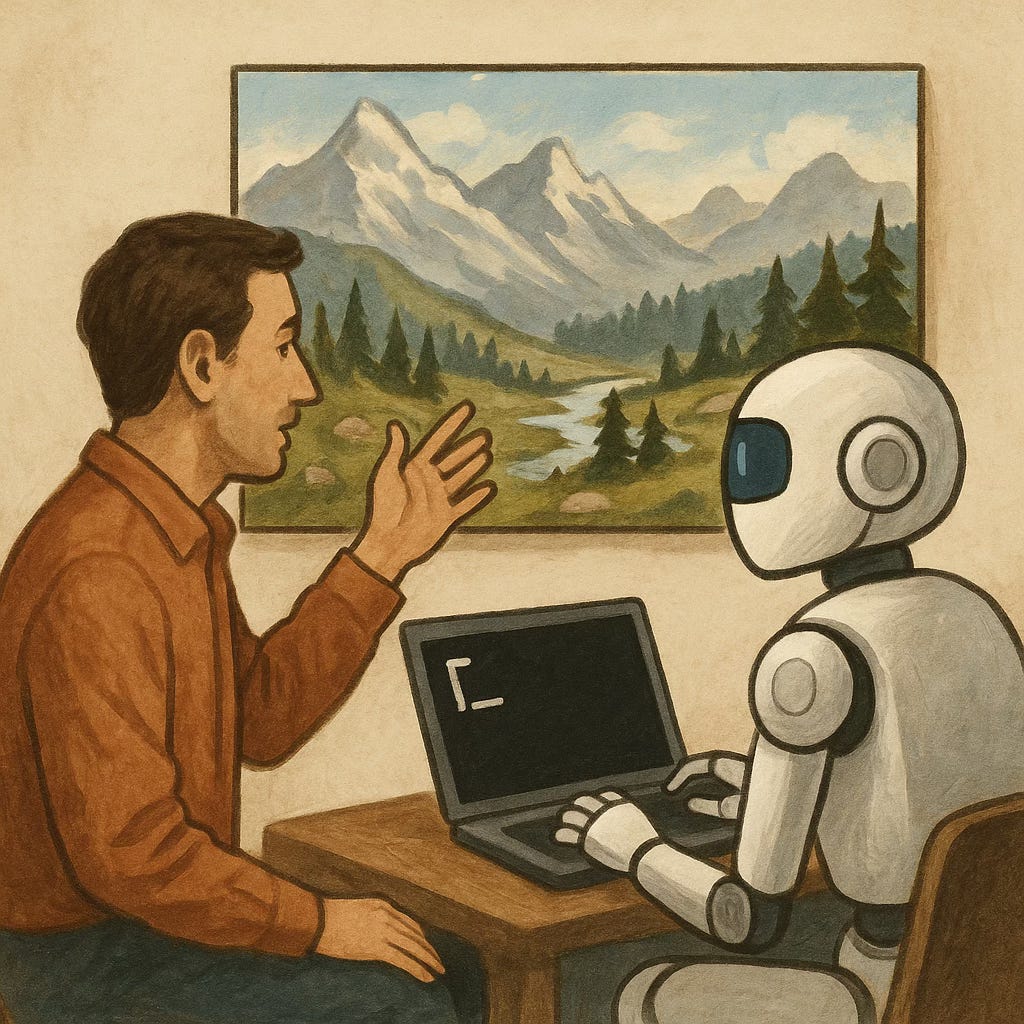

The best teams don’t run on formal processes. They run on trust, timing, shared intuition, and small acts of coordination that never show up on a workflow diagram. Much of what makes work effective happens between the lines - between roles, tools, messages, and moments. It’s rarely clean, often improvised, and deeply human. That’s what makes it effective. That’s also what makes it fragile.

As organisations explore how to bring AI into the heart of daily operations, the real challenge isn’t technical, but relational. How do we introduce intelligent systems into environments shaped by nuance, context, and human judgement? How do we ask agents to support work they can’t yet see?

In last week’s Shift*Academy newsletter, Lee made the case that enterprise AI offers a safer, more human-scaled path forward, precisely because it gives us the chance to shape the system intentionally:

“Enterprise AI gives us the chance to try out human-scale agentic AI and automation within safer, small world networks—and this is where we will really learn how to thrive alongside our bot buddies.”

This week, we go deeper. To make that opportunity real, we will need to codify the invisible to some extent: not to constrain it, but to make it legible; not to enforce rules, but to create space for collaboration between humans and systems, teams and tools, values and actions.

Codification is often misunderstood as a move toward control. In reality, it can be an act of care. A way to honour how teams actually work. A way to protect human agency by ensuring that AI enters the system on human terms.

This edition explores how to do that, starting with charters, protocols, and forkable patterns that put people first.

The Problem with Tacit Coordination

Most organisations depend on what people know, assume, and figure out on the fly to make things work. From how teams actually work to how decisions are escalated, challenged, or quietly deferred, the real operating model is partly informal, distributed, and undocumented.

We call this tacit coordination - the invisible glue that holds work together.

It’s often effective. Teams adapt quickly, cut through red tape, and respond to nuance in ways no central process could anticipate. But it also comes at a cost. Tacit coordination depends heavily on personal experience, local knowledge, and social networks. When those change (someone leaves, a team reshuffles, or a new system is introduced), the whole structure can wobble.

This fragility becomes a bigger problem when you introduce agentic AI into the mix.

AI systems don’t intuit context the way humans do. They don’t learn how your team works by watching how you move between Slack, Notion, and a spreadsheet at 5pm on a Thursday. If you want AI to support coordination, decision-making, or execution, it needs a structure it can see, interpret, and follow.

Today, most of what makes a team productive is invisible to machines, and that makes it hard to augment, hard to scale, and hard to improve safely.

This also means that if your early experiments with AI in the enterprise are bolted onto legacy systems or forced into process silos, they could reinforce bureaucracy instead of freeing people from it. Why? Because we haven’t yet translated the real work of coordination into a form AI can support.

To move forward, we need to stop treating codification as a top-down documentation exercise and start treating it as a team-level capability, a way to make the invisible visible, on our own terms, before someone else imposes a system we don’t control.

Ironically, this advantages some of the traditional, process-heavy organisations that have long been criticised for being rigid or bureaucratic. Think of large engineering firms or legacy industrial giants where coordination is engineered top-down, and everything from sign-offs to safety procedures is codified in central directives. These organisations may find it easier to plug in AI agents because their work is already legible to machines and there are clear rulesets to follow.

But what they gain in readiness, they risk losing in humanity. Their processes may be visible, but lacking in agility and creativity. Meanwhile, more community-driven, adaptive organisations - those shaped by trust, tacit know-how, and social coordination - may struggle to integrate AI, not because they are wrong, but because their ways of working are invisible by design.

The opportunity, then, is to take the best of both worlds: to codify work in a way that enables AI without compromising what makes work meaningful - baking both legibility and humanity into the operating system.

What Does Codified Coordination Really Mean?

To many leaders, the idea of “codifying” how teams work conjures images of stifling process manuals or rigid workflows. But that’s not the kind of codification we’re talking about here.

This isn’t about surveillance-driven automation, either. Some enterprise AI approaches rely on process discovery or process mining tools that use screen activity recordings to observe how users interact with systems, and then infer task flows for automation. These methods tend to capture only the mechanical steps, not the reasoning, judgement, or tacit understanding that shape how teams actually work. While they might create marginal efficiencies for the old ways of working, they risk entrenching brittle workflows and reinforcing bureaucracy. In contrast, the approach we’re exploring starts with human sense-making and flexibility, and is designed to make coordination legible without losing its nuance.

Codified coordination isn’t about locking teams into fixed ways of working. It’s about making their actual coordination patterns visible and usable, by people, by systems, and increasingly, by AI agents.

At its simplest, it means identifying and expressing:

What gets done

Who decides

How coordination happens

What "good" looks like

When and how to escalate

That might take the form of:

A team charter with roles, rituals, and norms

An SOP that lays out how to complete a recurring task

A prompt chain that drives an AI assistant for a specific workflow

A decision tree that guides responses to common edge cases

An operational map that shows how work flows across tools and roles

The goal is not to reduce everything to a diagram, but to create a shared model of work that is legible and flexible. It should be:

Human-readable, so team members know what to expect

Agent-usable, so AI systems can assist, monitor, or act where appropriate

Explainable, so decisions and exceptions make sense in context

Mutable, so teams can change it as they learn
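To make this concrete, here is a minimal sketch of how the five elements above could be written down in a form that is both human-readable and agent-usable. It is an illustration under our own assumptions, not a prescribed schema: the class names, field names, and the simple keyword-matching escalation check are all hypothetical, and a real team would choose its own.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class EscalationRule:
    """When and how to escalate: a trigger phrase and who takes over."""
    trigger: str
    escalate_to: str


@dataclass
class CoordinationPattern:
    """One codified piece of team coordination (field names are hypothetical)."""
    what_gets_done: str
    who_decides: str
    how_coordination_happens: str
    what_good_looks_like: str
    escalation: List[EscalationRule] = field(default_factory=list)

    def describe(self) -> str:
        """Render the pattern as plain text so people can read and challenge it."""
        lines = [
            f"Task: {self.what_gets_done}",
            f"Decider: {self.who_decides}",
            f"Coordination: {self.how_coordination_happens}",
            f"Good looks like: {self.what_good_looks_like}",
        ]
        for rule in self.escalation:
            lines.append(f"Escalate to {rule.escalate_to} when: {rule.trigger}")
        return "\n".join(lines)

    def who_handles(self, situation: str) -> str:
        """Return the escalation target if a trigger phrase appears in the
        situation text, otherwise the usual decider. Deliberately naive matching."""
        for rule in self.escalation:
            if rule.trigger.lower() in situation.lower():
                return rule.escalate_to
        return self.who_decides


# Example values are invented for illustration.
pattern = CoordinationPattern(
    what_gets_done="Weekly customer report",
    who_decides="report owner",
    how_coordination_happens="Draft shared in the team channel every Thursday",
    what_good_looks_like="Figures reconciled and reviewed by one peer",
    escalation=[EscalationRule(trigger="data mismatch", escalate_to="team lead")],
)

print(pattern.describe())
print(pattern.who_handles("Found a data mismatch in the Q3 figures"))
```

The same object serves both audiences: `describe()` gives team members something to read and argue with, while `who_handles()` gives an agent a rule it can follow and explain. Because it is plain data, the team can change it as they learn.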

When a team’s coordination is codified just enough, it becomes possible to:

Onboard new members faster

Delegate repeatable work to AI agents

Improve processes based on real feedback

Reduce decision fatigue

Spot duplication or drift across the organisation

But codification is not the end goal. It’s the scaffolding that makes augmentation possible - if we design it to evolve with the team, not to constrain it.

This is how we keep humans at the centre of our Enterprise AI story: by giving them the power to shape, fork, and adapt the very systems that AI will support.

Fork it! Let’s do it our way

If codified coordination is the scaffolding for agentic AI, then forkability is part of what makes it human-scaled.

The default instinct in most large organisations is to codify from the centre: define standard ways of working, issue templates, roll out a methodology, publish a handbook. But while consistency has its place, it rarely survives contact with reality. Every team works in a slightly different context. Every product, market, or function brings its own nuances. And in the best teams, those differences matter: they’re what drive learning, responsiveness, and performance.

That’s why the most effective coordination patterns are not handed down from above; they’re grown from the edge.

Teams are closest to the work. They hold the tacit knowledge of what actually happens, how exceptions are handled, what good really looks like in context. They are best placed to codify, not just follow, how coordination should work.

So rather than enforcing a single, brittle standard, we should offer base patterns and protocols that can be forked:

Start from a template - but make it yours

Adjust decision trees based on team roles and risk levels

Rewrite prompts and workflows to reflect the actual systems in play

Feed changes back to a central library so others can learn, remix, or improve

Don’t mistake this for chaos - it’s structured divergence. Think of it like Git for ways of working:

Fork a standard pattern

Adapt it locally

Curate the best changes

Keep the history
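The four steps above can be sketched in a few lines. This is a toy model under our own assumptions rather than a real tool: the `Pattern` class, its methods, and the example values are all hypothetical, and in practice a team might simply keep its patterns as files in an actual Git repository.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Pattern:
    """A way of working stored as simple key/value settings (hypothetical model)."""
    name: str
    content: Dict[str, str]
    parent: Optional[str] = None
    history: List[str] = field(default_factory=list)

    def fork(self, new_name: str) -> "Pattern":
        """Fork a standard pattern: copy it and remember where it came from."""
        return Pattern(
            name=new_name,
            content=dict(self.content),  # copy, so the base stays untouched
            parent=self.name,
            history=self.history + [f"forked from {self.name}"],
        )

    def adapt(self, key: str, value: str, reason: str) -> None:
        """Adapt it locally, and keep the history by recording why."""
        self.content[key] = value
        self.history.append(f"changed {key}: {reason}")

    def diff(self, other: "Pattern") -> Dict[str, str]:
        """Settings that differ from another pattern: candidates to curate back."""
        return {k: v for k, v in self.content.items() if other.content.get(k) != v}


# Example values are invented for illustration.
base = Pattern(
    name="standard-handover",
    content={"who_decides": "team lead", "escalate_after": "2 days"},
)
local = base.fork("support-team-handover")
local.adapt("escalate_after", "4 hours", reason="support tickets are time-critical")

print(local.diff(base))
print(local.history)
```

The point of the design is that nothing is lost. The base pattern is unchanged, the local version records both its origin and the reason for each change, and `diff()` gives a central library a short list of changes to review and curate.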

When you design for forkability, you don’t just make coordination clearer, you make it adaptable, explainable, and scalable. It allows agentic AI to function safely and effectively without flattening the human differences that make organisations resilient and creative.

And perhaps most importantly, it turns codification into a living, learning process, rather than a fixed artefact. What works today may not work tomorrow, and in an AI-augmented organisation, the ability to evolve coordination is as important as the ability to describe it.

If we want AI to support meaningful work rather than distort it, we need to start with the layer where humans and systems meet - team charters.

Read on to see how this simple artefact can become the foundation of a truly human-centred, agent-ready organisation, and to consider the risks and trade-offs we need to be aware of.