Who's the Bot Now?

More Enterprise AI investment is good, but without a vision that extends beyond the short-term trap of replacing people, we could end up in the bad place again...

The launch of GPT-4.5 last week was not so much a ‘breakthrough’ release as an expensive incremental improvement or consolidation of existing capabilities before the hotly anticipated GPT-5. Simon Willison has a good roundup of reactions and testing, including Andrej Karpathy’s amusing poll comparing GPT-4 and GPT-4.5 results, in which most respondents marked down the GPT-4.5 results, even though he and other experts ranked them higher in areas such as poetry writing and narrative flow.

We may yet experience the sublime comedy of an AGI emerging blinking into the light after burning through enough computing power to run a small planet, only to discover that people worship the equivalent of Douglas Adams’ Zaphod Beeblebrox and find know-it-all Marvin boring.

Who’s the Bot Now?

CIOs plan to focus more spending on enterprise AI strategy in 2025, according to Informa TechTarget's "2025 Technology Spending Intentions Survey", which ranked AI, data science and ML second among broad technology initiatives in terms of increased business importance, trailing only cybersecurity.

Good news for AI; however, it is worth repeating that the biggest opportunities, challenges and risks lie not in the technology domain, but in people and operations. Spending this money without asking what it means for how we organise people and work, and how we re-think, manage and coordinate value chains, risks failing to achieve the ROI firms should expect from AI investments.

Last week, we considered how learning needs to change to meet these challenges. But the wider questions around the role and agency of human workers in a mixed algorithmic / agentic digital workplace are also important, and right now companies appear to be all over the map on this issue.

Google co-founder Sergey Brin recently exhorted employees to work at least 60 hours per week (and by implication, also some weekends) to win the race for an AGI that could then replace much of their work.

Perhaps Google can become a highly optimised and automated adtech machine with little need for creativity or human relationships, but this will not be the case for most of the companies who are looking to AI to improve the way they work.

Meanwhile, as Azeem Azhar wrote last week, people are also starting to use AIs in a very human way, as a sounding board for provisional ideas, and as a thinking partner that can help bring them to fruition:

“Today, many people see AI as a tool primarily for automation, assuming it works best with structured, defined tasks. But the reality is quite different: AI is reshaping how we turn fuzzy intuitions into tangible, powerful ideas.

Your greatest notions are probably stuck in your head—too vague, too unstructured. Today’s AI will help you uncage them and turn them into reality.

Welcome to the world of the vibe worker.”

Following on from our newsletter covering the rise of super operators a couple of weeks ago, more examples of AI supporting high-agency human creativity are emerging.

The New York Times recently wrote about how AI is changing the way Silicon Valley builds start-ups, sharing several examples of startups that are able to get from zero to one with lower (or even zero) staffing costs and more scope for being revenue positive early on, thanks to AI.

One implication of this is that venture capital loses some of its power over founders, who can spend less time running funding rounds and generally require less money to build out their ideas, in contrast to the previous scaling model where the risks were higher:

Bigger teams needed managers, more robust human resources and back office support. Those teams then needed specialized software, along with a bigger office with all the perks. And so on, which led start-ups to burn through cash and forced founders to constantly raise more money. Many start-ups from the funding boom of 2021 eventually downsized, shut down or scrambled to sell themselves.

And this is not just going to affect knowledge work and coding. Wired shared a story about a US manufacturer of steel balls - already a mostly automated production process - who are using Microsoft’s Factory Operations Agent to analyse data in real time, which allows workers to ask questions and gain deeper insights into how their processes are running. And by combining data from all the company’s factories around the world, the scope for identifying the impact of small differences in operations at different sites comes into play as well:

“[Conversational data access is] particularly valuable in manufacturing, where tracking down a set of errors might mean comparing data across quality assurance systems, HR software, and industrial control systems like kilns and precision drills. Within the industry, this is known as the IT/OT gap: the disconnect between information tech like spreadsheets and the operational tech that’s used in a factory. AI companies believe large language models like the Factory Operations Agent will be able to work across that gap, allowing it to answer basic troubleshooting questions in a conversational way.”

Looking Beyond Short-term People Replacement

Until we (presumably) de-couple work and jobs from economic survival, there will be a lot of worried employees looking at the success or failure of automation initiatives and asking what it means for them.

Kevin Kelly recently argued that we are at the beginning of a handoff of work from people to bots, just at the point where fertility rates look set to reduce population growth - he predicts a period of absolute decline starting in the next few decades. Kelly’s view of what this means is rather jarring:

Summary: Human populations will start to decrease globally in a few more decades. Thereafter fewer and few[er] humans will be alive to contribute labor and to consume what is made. However at the same historical moment as this decrease, we are creating millions of AIs and robots and agents, who could potentially not only generate new and old things, but also consume them as well, and to continue to grow the economy in a new and different way. This is a Economic Handoff, from those who are born to those who are made.

As with other big questions about human society, the answers will depend on whether we solve for human quality of life, aggregate economic output or ideological concepts such as “freedom”, faith or fealty to fiefdoms.

We have written about the positive vision for programmable organisations that are more human-centred than the old system of hierarchical process management. But this and other win-win visions for the relationship between people and AI are more complex and harder to achieve than, for example, Sergey Brin’s approach of asking workers to accelerate automating themselves out of a job, or other similarly short-term strategies.

It is unrealistic to expect any leader whose position and income are tied to near-term stock market performance to execute on a transition strategy that might take 3-5 years to play out. But there are plenty of organisations and leaders out there who take a longer-term view of their organisations and also the societies they operate within.

Vision Matters Even at this Early Stage

There are also plenty of people working to define the future of human collaboration and the way we organise work and value creation. Whatever our differences in focus or detail, I hope we can work together to shape a future that provides meaningful roles and opportunities for people to create things and solve problems, whilst reducing overall costs by orders of magnitude using AI and automation.

To end on an optimistic note, I would like to share two excellent presentations I enjoyed last week that help propel that positive vision forwards, and which both build on and inform some of our thinking.

Zoe Scaman’s The Agentic Era: A.I.'s Ascendance From Oracle To Operator is a lovely summary of the shift we are seeing, and it also spells out the promise and the peril of AI:

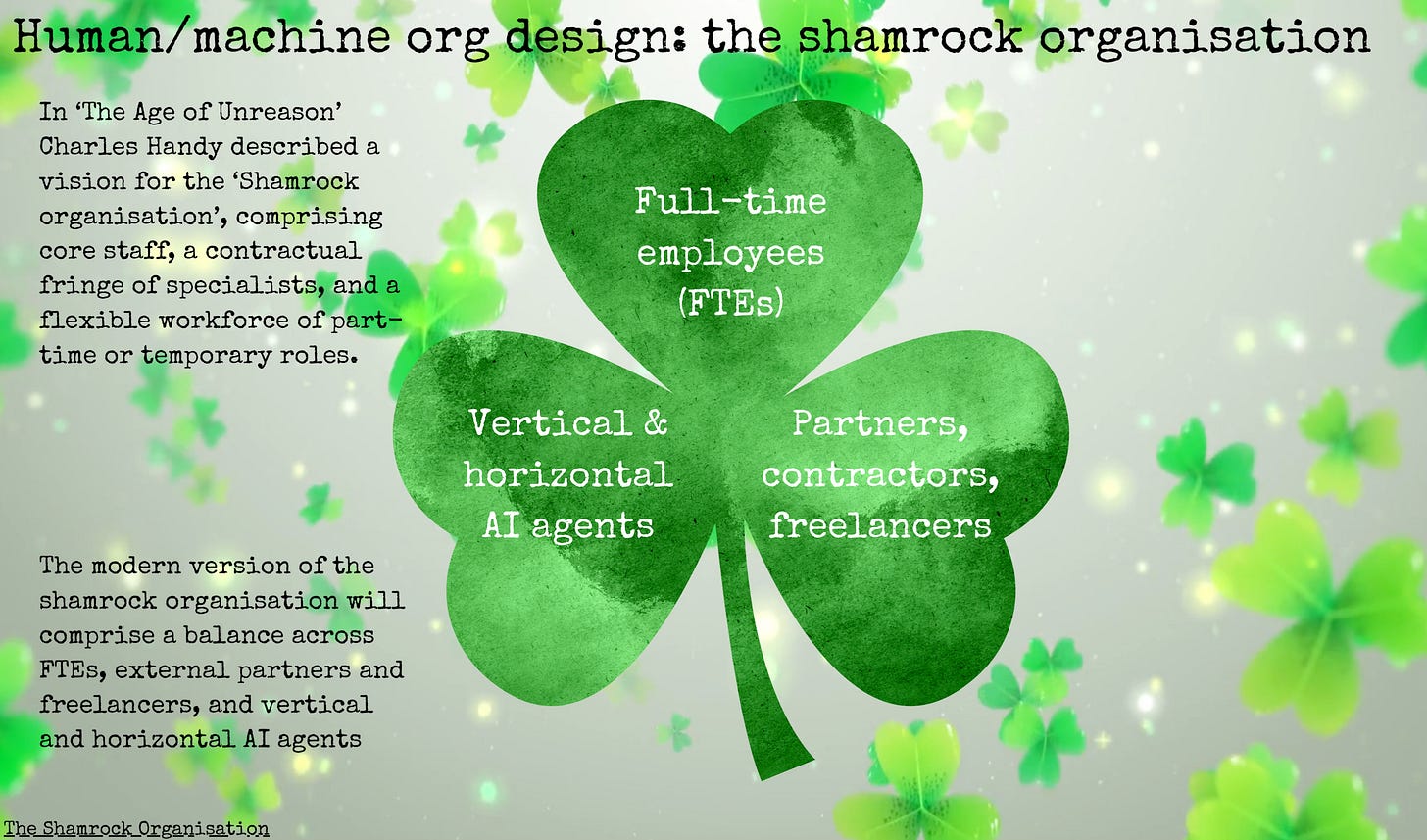

Neil Perkin’s The Agentic Organisation: How AI agents are set to change everything, and what to do about it is another worthwhile contribution to the debate, focusing more on how we get there, with a modern update to Charles Handy’s notion of the Shamrock Organisation and a lovely quote from Rohan Tambyrajah: ‘What can’t be automated should be elevated.’