From prompt whispering to model sommeliers: how best to think about the ‘atomic’ nature of LLMs in the AI evolution story?

Lots going on in AI-land as usual, but what can recent developments tell us about how to build on the capabilities of LLMs, and what should execs be asking?

The controversial investor Chamath Palihapitiya last year re-told a story about the rise of refrigeration that concluded it was not the fridge makers who captured the value their invention created, but firms like Coca-Cola who used the technology to build a global sales and logistics operation.

If LLMs are the fridges, who will find the business models to exploit them in a similar way?

The Spreadsheet Adoption Cycle

Benedict Evans recently shared a thoughtful piece about AI adoption that considers two key questions: (1) where can we find compelling use cases outside of coding; and, (2) can they be addressed directly by generalist LLMs, or do we need to wrap them up with other components within single-purpose apps?

He cites the example of the spreadsheet, whose invention was as impactful as the first transformer-based AIs (and fridges!), but which also needed time to overcome old habits and a lack of understanding before it came to be used as widely as it is today. He presents two problems with the idea that LLMs can ultimately do it all:

The narrow problem, and perhaps the ‘weak’ problem, is that these models aren’t quite good enough, yet...

The deeper problem, I think, is that no matter how good the tech is, you have to think of the use-case. You have to see it. You have to notice something you spend a lot of time doing and realise that it could be automated with a tool like this.

Some of this is about imagination, and familiarity. It reminds me a little of the early days of Google, when we were so used to hand-crafting our solutions to problems that it took time to realise that you could ‘just Google that’. Indeed, there were even books on how to use Google, just as today there are long essays and videos on how to learn ‘prompt engineering.’

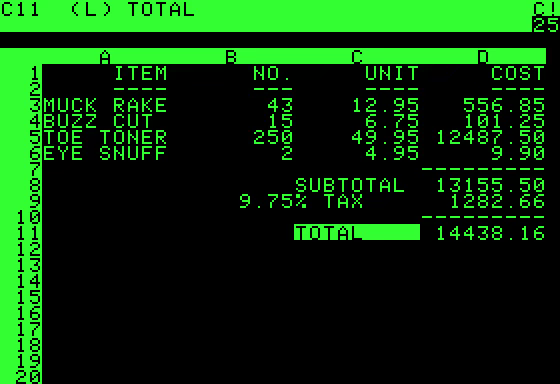

The spreadsheet is a great example of an all-purpose tool that is so easy and useful that it risks crowding out other more specific tools, to the extent that people often joke about how much critical infrastructure probably depends on a spreadsheet running on an old, forgotten machine.

Diginomica this week shared some examples of professional services firms wrestling with data transformation and automation. Within this sector, accounting firms are a great example of what should be a very easy path to AI adoption and automation. Accounting firms are literally made of algorithms - in the guise of thousands of spreadsheet calculators and formulae - with a social pyramid of partners and advisors on top.

In theory, it should be fairly straightforward to digitally transform a large accounting firm. But, like law firms, the whole socio-political structure holding back change is perversely incentivised to be inefficient, partly because of the way the partnership model works, but most of all because of the way they bill their customers (typically by the hour, not by outcomes). So, whilst it took spreadsheets longer than we might imagine to achieve dominance in business, it might take just as long to replace them.

Are LLMs the atoms, organs or creatures in the AI evolution story?

I was intrigued this week by reports of a new primary endosymbiosis: an alga appears to have permanently absorbed a cyanobacterium, giving it the ability to fix nitrogen. The last known example of this happening is what gave plants the ability to photosynthesise.

Evolution in a complex ecosystem is messy and unpredictable, but it is based on the simplicity of individual agents pursuing their own fitness function to overcome predatory threats and environmental constraints. Atoms join together in cells, which form tissue and organs that are the sub-systems of organisms, which then form populations that shape the overall biosphere.

What are LLMs in this metaphor?

Mustafa Suleyman, recently acqui-hired by Microsoft, believes AIs are a new species of being that exist to enhance our limitless creative potential:

“AI is to the mind what nuclear fusion is to energy,” he said. “Limitless. Abundant. World-changing.”

But should we treat them as magic creatures that promise wonder and delight, or are they better thought of as building blocks of more developed organisms that include other forms of automation, logic and reasoning? And how can we accelerate their evolution in the real world, rather than in the lab where they are fed internet-scraped (or synthetic) data like a massive fish farm?

Mark Zuckerberg announced last week that Meta’s Llama 3 LLM was being released into the wild as an open source model that anyone can improve and extend. This was regarded by some analysts as a defensive strategic move to stop ‘closed’ model providers such as OpenAI from creating a winner-takes-all market, but it will also accelerate the model’s evolution. As Ben Thompson put it:

Meta, in other words, is not taking some sort of philosophical stand: rather, they are clear-eyed about what their market is (time and attention), and their core differentiation (horizontal services that capture more time and attention than anyone); everything that matters in pursuit of that market and maintenance of that differentiation is worth investing in, and if “openness” means that investment goes further or performs better or handicaps a competitor, then Meta will be open.

Reasoning versus prediction and learning in the real world

As I wrote in a previous edition, probabilistic inference will get us so far, but AIs need a model of the world - and testable hypotheses - if they are to do anything approaching reasoning.

So how can they learn in the real world?

Tesla’s so-called Full Self Driving is an interesting case in point. In advance of the current version (FSD 12), it switched from a rules-based system to a full neural net approach, which means it crunches vast amounts of user driving data to learn how real people drive in real situations, rather than just following the rules of the road. Tesla, or rather Elon Musk, is betting the farm on the company being valued as an AI leader centred on FSD rather than as a car company, and got a boost this week with regulatory approval in China.

It is interesting how little credit FSD 12 gets for its truly remarkable abilities in most cases, and its safety take-overs when it sees danger the driver can’t see, in contrast to the understandable criticism when FSD gets it wrong. It is unclear if “true” self-driving is realistically possible in all scenarios, or even desirable, but Musk will soon start running out of road to fulfil his very public (and long overdue) promises on this.

FSD is currently a supervised AI that leaves human drivers ultimately accountable, and perhaps it will never truly achieve a fully unsupervised mode as Musk hopes - but I wouldn’t bet on it either way at this point.

Perhaps we can find some less safety-critical use cases where supervised models can learn about the real world, and ideally where a degree of fuzziness is OK?

Fuzzy use cases and LLM sommeliers

HBR published a piece this week looking at using LLMs and AI to learn more from customer interactions with companies, rather than the usual go-to use case of chatbots doing first-line customer service on the cheap. In the article, Thomas Davenport et al. cited some real-world examples where this seems to be paying off:

Some companies are now using GenAI to summarize conversations with customers. According to Nimish Panchmatia, Chief Data and Transformation Officer at DBS Bank (a long-term research site of Davenport’s), a GenAI-powered virtual assistant performs call transcription, summarization, and recommendations for action. It’s been live for several months with new capabilities progressively being launched. Call center agents are seeing savings of around 20% on their call handling time. The next stage to go live is the GenAI recommending answers/solutions in real time to the agent to decide whether to use with the customer. The primary reason for the system was to increase customer call quality as opposed to reducing employment levels.

This might be the kind of use case where predictive fuzziness is not a big problem, and perhaps it could also showcase other GenAI skills such as developing dynamic customer personas to bring its insights to life for designers, product people and customer service reps.

I think we need these kinds of real-world use cases to truly put LLMs through their paces, and to give us actionable starting points for combining and building out the other pieces of the puzzle that will be needed to create smart services and higher order apps.

Elsewhere, Dion Hinchcliffe shared his thoughts this week on how enterprises can start to understand the different models available - big, small, general, and specialised - and where to use them. So in addition to prompt whisperers, perhaps we also need LLM sommeliers to guide us through the pairing choices that make sense for the dishes we are trying to create.

But for now, at least in the enterprise, we have barely explored the meal deal menu of simple co-pilot use cases that Microsoft tenants are capable of, so perhaps we start there?

Quick questions to take away

For CEOs: how are you identifying the core business capabilities AI can help you develop and focusing investment on the most promising medium-term use cases?

For CIOs: how can you organise and categorise your available AI models and help the wider organisation understand which to build on for different types of AI or automation services?

For CHROs: how can you help solve the ‘spreadsheet adoption’ challenge and help employees understand what’s in it for them to take advantage of co-pilots and automation?

For team and function leads: how are you trawling for use cases where fuzziness is not a risk, and where we can give AIs an opportunity to learn more about what our teams do and why?

One of the best ways to accelerate experimentation and learning in this area is the intersection between low-/no-code tools and LLM prompting, so we will take a deep dive into that particular capability area in next week’s edition.

Brilliant!

So often with the introduction of a new technology, we seek to use it to do something we’ve already been doing but faster or cheaper or more easily. It may take a human generation before that technology is used for purposes that were previously inconceivable.